HARRISBURG, PA - In a significant move reflecting growing national concern, a bipartisan group of Pennsylvania state lawmakers introduced comprehensive legislation, House Bill 1925 (H.B. 1925), aimed at regulating the rapidly growing use of artificial intelligence (AI) in the commonwealth’s healthcare sector. The bill seeks to establish clear guardrails for AI’s application by insurers, hospitals, and clinicians, focusing on patient safety, transparency, and minimizing algorithmic bias.

The legislation, announced on October 6th, comes as AI systems are increasingly integrated into nearly all facets of the healthcare industry, from patient care and diagnostics to billing, claims management, and utilization reviews. Led by state Rep. Arvind Venkat, D-Allegheny, an emergency medicine physician, and supported by colleagues from both parties, the bill attempts to strike a critical balance between fostering innovation and protecting patient well-being.

The Core of the Legislation: Mandating the “Human in the Loop”

The key provisions of H.B. 1925 center on accountability and transparency:

- Human Decision-Maker: The bill mandates that a human decision-maker must make the ultimate determination based on an individualized assessment when AI is used by insurers, hospitals, or clinicians, especially in matters of patient care or medical necessity. This provision is a direct response to concerns that over-reliance on automated systems could lead to unjust denials of care or misdiagnoses.

- Transparency and Disclosure: Insurers, hospitals, and clinicians would be required to provide transparency to patients and the public regarding how AI is being used in their practices and companies.

- Bias and Anti-Discrimination Attestation: Entities using AI would have to submit an attestation to the relevant state agencies (PA Department of Insurance, PA Department of Human Services, or PA Department of Health) demonstrating that bias and discrimination, already prohibited by state law, have been minimized in their AI systems.

“AI certainly has the capacity to enhance all aspects of human life, including the health care space, but it should never replace the expertise or judgment of health care clinicians,” said state Rep. Greg Scott (D-Montgomery), emphasizing the bill’s focus on preserving human oversight.

Historical Context: The Rise of State-Level Regulation

Pennsylvania’s legislative action is not happening in a vacuum; it is part of a burgeoning national trend of states stepping in to regulate healthcare AI in the absence of comprehensive federal guidance.

For years, the use of AI in medicine was primarily governed by existing rules, such as those from the U.S. Food and Drug Administration (FDA) for Software as a Medical Device (SaMD), or general patient privacy laws like HIPAA. However, the rise of generative AI and its use in high-stakes areas like insurance claim denials has accelerated state action.

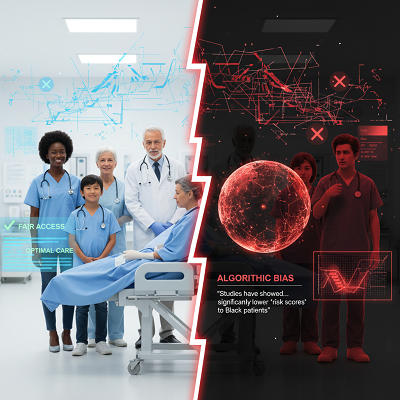

- The Problem of Bias: The urgency for regulation has been heightened by documented cases where AI algorithms have reinforced or exacerbated existing health inequities. Studies have shown, for example, that algorithms using metrics like the cost of care as a proxy for a patient’s health needs can assign significantly lower “risk scores” to Black patients with the same conditions as White patients, due to systemic under-spending on Black patient care. This algorithmic bias can lead to disparities in treatment and access.

- The Insurance Conflict: A major catalyst for state-level payor regulation has been public scrutiny and even national class-action lawsuits alleging that large health insurers have unlawfully used AI tools to automatically deny claims, prompting legislators to mandate physician involvement in medical necessity determinations.

Pennsylvania joins states like California, Utah, and Colorado, which have already passed laws focused on AI transparency, anti-discrimination, and requiring human oversight in healthcare decisions. The sheer volume of AI-related bills introduced across the country (over 250 in 46 states this year alone) underscores a collective realization that existing frameworks are insufficient for this evolving technology.

Current Trends and Expert Opinion: Balancing Guardrails and Innovation

Experts agree that state-level action is filling a regulatory vacuum, but the challenge remains in crafting rules that protect patients without stifling innovation.

- Transparency and Accountability: The focus on transparency is a core theme in current AI regulation. As Dr. Michael Suk, AMA Board of Trustees Chair, has noted, the AMA’s vision includes a system where AI improves patient experience and outcomes, but concerns remain that automated tools are “making automated decisions without considering the nuances of each individual patient’s medical conditions.”

- The ‘Human in the Loop’: The requirement for a human decision-maker, as codified in H.B. 1925, is a common and widely supported regulatory strategy. This “human in the loop” approach is seen as an essential safeguard, especially for consequential decisions like diagnosis or denying coverage. As Rep. Bridget Kosierowski (D-Lackawanna), a nurse and co-sponsor, stated, “We need experienced doctors and nurses even more now to assess the accuracy of AI to ensure that bias and discrimination haven’t influenced its findings.”

- Risk-Based Frameworks: Some legal and technical experts advocate for a risk-based framework for regulation, meaning that AI tools posing a higher risk to patients (e.g., autonomous surgery) should face more rigorous controls and scrutiny than lower-risk applications (e.g., administrative chatbots or ambient scribing). The Pennsylvania bill appears to lean toward this concept by focusing on AI use in “individualized patient assessments” and decisions by insurers.

Implications for Pennsylvania’s Healthcare Future

If passed, H.B. 1925 would have significant implications for all healthcare entities in Pennsylvania:

- For Patients: The bill promises greater confidence and safety. Patients would be more likely to know when AI influenced their care decisions and could be assured that a licensed professional reviewed any major AI-aided outcome, especially insurance denials.

- For Insurers and Hospitals: Compliance would require a major administrative effort. These entities would need to audit their AI systems for bias, establish clear disclosure protocols, and restructure workflows to ensure a physician or clinician maintains final decision-making authority over AI recommendations. Failure to do so could open them up to new avenues of legal liability.

- For Innovation: The legislation must navigate the delicate terrain of not over-regulating new technology. While the new rules provide necessary guardrails, AI developers and healthcare systems will need to ensure that compliance requirements do not inadvertently slow down the adoption of beneficial AI applications that improve efficiency and help address the growing healthcare worker shortage.

Referred to the State House Communications & Technology Committee, H.B. 1925 represents a critical step for Pennsylvania, reflecting a statewide commitment to ethical technology use and patient-centered care in the age of artificial intelligence.
