The promise of Artificial Intelligence (AI) to revolutionize healthcare, from accelerating drug discovery to providing more accurate, personalized diagnostics, is immense. However, this transformation is predicated on one fundamental and deeply sensitive resource: patient data. As AI systems ingest vast amounts of Electronic Health Records (EHRs), medical images, and genetic sequences, the imperative to ensure robust data security is not merely a regulatory hurdle but a pillar of public trust.

A breach of Protected Health Information (PHI) is more than a technical failure; it is a direct threat to patient autonomy and financial stability, and to the legal standing of healthcare providers. The industry, historically a prime target for cybercriminals due to the high value of medical data, must now balance innovation with iron-clad security. This article explores five data-secure approaches that are currently defining the responsible integration of AI into medicine.

Background & Historical Context: The Data-Privacy Reckoning

The marriage of technology and medicine is not new, but the exponential growth of data and of AI’s capabilities marks a distinct era. The historical context of data privacy in medicine is rooted in foundational regulations like the Health Insurance Portability and Accountability Act (HIPAA) of 1996 in the U.S. and the General Data Protection Regulation (GDPR) in Europe. These frameworks, HIPAA especially, were established before the mainstream adoption of large-scale Machine Learning (ML) models.

The early 2010s saw major initiatives like IBM Watson enter the healthcare space, demonstrating AI’s potential to analyze complex medical literature and patient data. However, the data hunger of these systems quickly raised alarm bells. The challenge shifted from how to use the data to how to use the data securely and ethically. Experts began to stress that simply “de-identifying” data, a common practice of removing direct identifiers like names, was no longer sufficient, as sophisticated AI could potentially re-identify individuals by cross-referencing seemingly anonymous data. This led to a critical realization: privacy needed to be protected not just around the data, but within the AI model itself.

Current Trends: The Five Pillars of Data-Secure AI

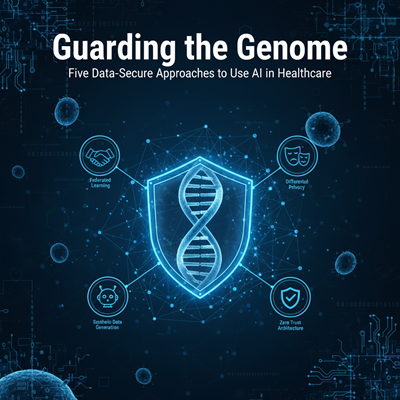

Today, the most innovative and secure strategies focus on minimizing the exposure of raw, sensitive data while maximizing the utility of its insights. The following five approaches represent the frontier of data-secure AI in healthcare:

- Federated Learning (FL) 🤝

What it is: Federated Learning is a machine learning technique that trains AI models on decentralized datasets. Instead of collecting all patient data into one central cloud or server for training (a single, high-value target for hackers), the model travels to the data. Individual hospitals or clinics train their own local copies of the AI model using their local patient data, and only the updated model parameters (the learned insights) are sent back to a central server to be aggregated into a final, global model.

Security Advantage: This significantly reduces the risk of a massive data breach, as patient records never leave the secure, local environment of the healthcare provider.
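The train-locally, aggregate-centrally loop described above can be sketched in a few lines. This is a minimal illustration of weighted federated averaging, not a production framework: the three "hospital" datasets are randomly generated toy data, the model is a one-feature linear regression, and every function name here (`local_update`, `federated_average`, `run_federated_training`) is hypothetical.

```python
import random


def local_update(weights, data, lr=0.3, epochs=20):
    """Gradient descent on one site's private data; only weights leave the site."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)


def federated_average(updates, sizes):
    """Combine site models, weighting each by its number of local records."""
    total = sum(sizes)
    w = sum(u[0] * s for u, s in zip(updates, sizes)) / total
    b = sum(u[1] * s for u, s in zip(updates, sizes)) / total
    return (w, b)


def make_site_data(n, rng):
    """Toy stand-in for a hospital's records: y = 3x + 1 plus small noise."""
    xs = [rng.uniform(0, 1) for _ in range(n)]
    return [(x, 3 * x + 1 + rng.gauss(0, 0.05)) for x in xs]


def run_federated_training(rounds=30, seed=0):
    rng = random.Random(seed)
    sites = [make_site_data(n, rng) for n in (40, 60, 100)]
    global_weights = (0.0, 0.0)
    for _ in range(rounds):
        # Each site starts from the current global model and trains locally.
        updates = [local_update(global_weights, data) for data in sites]
        # The server sees model parameters only, never the raw records.
        global_weights = federated_average(updates, [len(d) for d in sites])
    return global_weights


if __name__ == "__main__":
    print(run_federated_training())
```

Note that the server function receives only `(w, b)` pairs and record counts. Real deployments typically add protections on top of this, because model updates themselves can reveal information about the training data.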

- Differential Privacy (DP) 🎭

What it is: Differential Privacy is a mathematically rigorous technique that introduces a carefully calculated amount of random “noise” to a dataset or to the results of a query before it is released. The goal is to make it statistically infeasible to determine with confidence whether any single individual’s data was included in the computation.

Security Advantage: It allows researchers to analyze trends and patterns across large populations without compromising the privacy of any single individual. Even if an attacker has auxiliary information, they cannot confidently link a specific person to the data, effectively protecting against re-identification attacks.
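One common way to add that calibrated noise is the Laplace mechanism, sketched below for a simple counting query. The assumptions are stated plainly: the records are made-up dictionaries, `dp_count` and `laplace_noise` are hypothetical helper names, and the privacy parameter `epsilon` (smaller means more noise and stronger privacy) is chosen arbitrarily. Floating-point Laplace sampling like this has known weaknesses, so a vetted differential privacy library would be the safer choice in practice.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def dp_count(records, predicate, epsilon, rng=None):
    """Return a noisy count of the records matching `predicate`.

    Adding or removing one person changes a count by at most 1, so the
    sensitivity is 1 and the Laplace noise scale is 1 / epsilon.
    """
    rng = rng or random.SystemRandom()
    true_count = sum(1 for record in records if predicate(record))
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)


if __name__ == "__main__":
    # Hypothetical toy cohort: one record per age from 20 to 89.
    cohort = [{"age": age} for age in range(20, 90)]
    noisy = dp_count(cohort, lambda r: r["age"] >= 65, epsilon=0.5)
    print(f"Noisy count of patients aged 65 or over: {noisy:.1f}")
```

Each released answer consumes part of a privacy budget, so repeated queries against the same data need their `epsilon` values accounted for together.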

- Homomorphic Encryption (HE) 🔒

What it is: Homomorphic Encryption is a form of encryption that allows computations to be performed directly on encrypted data. In simple terms, a third party (like a cloud AI service) can run an analysis on encrypted patient data and produce an encrypted result, which only the healthcare provider can decrypt.

Security Advantage: The data remains encrypted throughout its entire lifecycle: at rest, in transit, and during computation. This is a game-changer, as it allows sophisticated AI services to be used without ever requiring them to see the raw, unencrypted PHI.
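To make "computing on encrypted data" concrete, here is a toy version of the Paillier cryptosystem, which is additively homomorphic: multiplying two ciphertexts yields an encryption of the sum of their plaintexts. This is an illustrative sketch only. The fixed primes are far too small for real security, the function names are hypothetical, and Paillier supports addition and scalar multiplication rather than arbitrary computation (fully homomorphic schemes are considerably more involved).

```python
import math
import secrets

# Two well-known Mersenne primes. Demo only: real keys use much larger,
# randomly generated primes.
P = 2**31 - 1
Q = 2**61 - 1


def generate_keys(p=P, q=Q):
    """Return (public modulus n, private key (lam, mu))."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p-1, q-1)
    mu = pow(lam, -1, n)  # modular inverse; valid because the generator is n + 1
    return n, (lam, mu)


def encrypt(n, message):
    """Encrypt an integer 0 <= message < n using a fresh random blinding factor."""
    n_sq = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1
        if math.gcd(r, n) == 1:
            break
    return (pow(n + 1, message, n_sq) * pow(r, n, n_sq)) % n_sq


def decrypt(n, private_key, ciphertext):
    lam, mu = private_key
    return ((pow(ciphertext, lam, n * n) - 1) // n * mu) % n


def encrypted_sum(n, ciphertexts):
    """Add plaintexts without decrypting: multiply ciphertexts modulo n^2."""
    total = encrypt(n, 0)
    for c in ciphertexts:
        total = (total * c) % (n * n)
    return total


def encrypted_scale(n, ciphertext, factor):
    """Multiply the hidden plaintext by a public integer factor."""
    return pow(ciphertext, factor, n * n)


if __name__ == "__main__":
    n, private_key = generate_keys()
    # Hypothetical readings, encrypted by the provider before upload.
    uploaded = [encrypt(n, value) for value in (120, 135, 128)]
    # The cloud service computes on ciphertexts only.
    result = encrypted_sum(n, uploaded)
    # Only the provider, who holds the private key, can read the total.
    print(decrypt(n, private_key, result))
```

The service that calls `encrypted_sum` never holds `private_key`, which is the property the paragraph above relies on.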

- Synthetic Data Generation (SDG) 🤖

What it is: Synthetic Data Generation uses Generative AI to create entirely new, artificial patient records that statistically and clinically mirror the real-world dataset. This synthetic data is designed to preserve the statistical properties, correlations, and predictive power of the original PHI while not being tied to any real individual.

Security Advantage: It provides a safe, fully de-identified “sandbox” for developers to train and test their AI models without ever touching genuine patient data, which is especially useful for open sharing, research, and collaborative development.
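The core idea, fit a model to the real data and then sample new records from the model, can be shown with a deliberately simple stand-in. The sketch below swaps the generative AI model for a two-variable Gaussian fitted to age and systolic blood pressure; the "source" records are themselves randomly generated toy data, and all names are hypothetical. A fitted model can still memorize or leak details of real records, so synthetic output is not automatically private.

```python
import math
import random
import statistics


def fit_gaussian(records):
    """Estimate means, standard deviations and correlation of (x, y) pairs."""
    xs = [r[0] for r in records]
    ys = [r[1] for r in records]
    mean_x, mean_y = statistics.fmean(xs), statistics.fmean(ys)
    sd_x, sd_y = statistics.stdev(xs), statistics.stdev(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in records) / (len(records) - 1)
    return mean_x, mean_y, sd_x, sd_y, cov / (sd_x * sd_y)


def sample_synthetic(params, count, rng):
    """Draw new (x, y) records that follow the fitted means and correlation."""
    mean_x, mean_y, sd_x, sd_y, rho = params
    residual = math.sqrt(max(0.0, 1.0 - rho * rho))
    synthetic = []
    for _ in range(count):
        z1, z2 = rng.gauss(0, 1), rng.gauss(0, 1)
        x = mean_x + sd_x * z1
        y = mean_y + sd_y * (rho * z1 + residual * z2)
        synthetic.append((x, y))
    return synthetic


def make_toy_source(count, rng):
    """Toy stand-in for real records: (age, systolic blood pressure)."""
    ages = [rng.uniform(30, 80) for _ in range(count)]
    return [(age, 100 + 0.5 * age + rng.gauss(0, 8)) for age in ages]


if __name__ == "__main__":
    rng = random.Random(7)
    source = make_toy_source(500, rng)
    params = fit_gaussian(source)
    synthetic = sample_synthetic(params, 1000, rng)
    print("source correlation:   ", round(params[4], 3))
    print("synthetic correlation:", round(fit_gaussian(synthetic)[4], 3))
```

Comparing the two correlations is a basic utility check: the synthetic set should reproduce the relationships that downstream models depend on.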

- Zero Trust Architecture (ZTA) 🛡️

What it is: ZTA is a modern cybersecurity framework based on the principle of “never trust, always verify.” Unlike traditional security, which trusts anyone inside the network perimeter, ZTA requires strict identity verification and authorization for every single user, device, and system attempting to access any resource on the network, regardless of location.

Security Advantage: When applied to AI systems, it enforces layered access controls, multi-factor authentication, and continuous monitoring, ensuring that only the right people (or AI models) can access sensitive patient data, minimizing the risk from both external attackers and insider threats.
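In code, “never trust, always verify” amounts to running the full set of checks on every request and denying by default. The sketch below is a simplified, hypothetical policy check rather than any particular product's API: the shared secret, the role names, and the policy table are invented for illustration, and a real deployment would rely on an identity provider and a managed key store.

```python
import hashlib
import hmac
from dataclasses import dataclass

DEMO_KEY = b"demo-only-secret"  # hypothetical; never hard-code real keys

# Explicit allow-list of (role, action) pairs; anything else is denied.
POLICY = {
    ("clinician", "ehr:read"),
    ("ml-training-job", "features:read"),
}


@dataclass(frozen=True)
class AccessRequest:
    subject: str
    role: str
    action: str
    issued_at: float
    mfa_passed: bool
    device_compliant: bool
    signature: str


def sign_claims(subject, role, issued_at, mfa_passed, key=DEMO_KEY):
    """Produce the signature an identity provider would attach to the claims."""
    message = f"{subject}|{role}|{issued_at}|{mfa_passed}".encode()
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def authorize(request, now, max_age_seconds=300, key=DEMO_KEY):
    """Evaluate one request; being inside the network grants nothing."""
    expected = sign_claims(
        request.subject, request.role, request.issued_at, request.mfa_passed, key
    )
    if not hmac.compare_digest(expected, request.signature):
        return False, "invalid signature"
    if now - request.issued_at > max_age_seconds:
        return False, "credential expired"
    if not request.mfa_passed:
        return False, "multi-factor authentication required"
    if not request.device_compliant:
        return False, "device not compliant"
    if (request.role, request.action) not in POLICY:
        return False, "action not permitted for role"
    return True, "ok"


if __name__ == "__main__":
    issued = 1_000_000.0
    request = AccessRequest(
        subject="dr.lee", role="clinician", action="ehr:read",
        issued_at=issued, mfa_passed=True, device_compliant=True,
        signature=sign_claims("dr.lee", "clinician", issued, True),
    )
    print(authorize(request, now=issued + 60))
```

The same `authorize` call applies to an automated training job as to a human clinician, which is how the model pipeline itself is kept inside the access policy. Logging each decision would supply the continuous monitoring mentioned above.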

Expert Opinions: Balancing Innovation and Prudence

Leading figures in health tech and regulatory bodies stress that a purely defensive approach to security is insufficient. The consensus is shifting toward “Privacy-Preserving AI” as a design principle.

Dr. Anya Singh, a chief AI officer at a major hospital system, notes, “The conversation has shifted from ‘Can we do this?’ to ‘How do we architect this to be secure by default?’ Techniques like Federated Learning are essential. We cannot ask our patients to choose between cutting-edge diagnostics and the security of their most personal data. Security has to be built in, not bolted on.”

Regulators, too, are catching up. An official from a European data protection agency recently commented, “The new AI Act proposals recognize that basic encryption isn’t enough. We must enforce the use of techniques like Differential Privacy for high-risk AI applications in clinical settings to ensure algorithmic outcomes do not compromise the fundamental rights of the data subject.”

The core challenge remains the interoperability and standardization of these secure technologies. Healthcare institutions, often burdened by legacy IT systems, face significant technical and financial barriers to implementing complex cryptographic methods like Homomorphic Encryption.

Implications for the Future of Healthcare

The successful and secure adoption of these approaches carries significant implications for the future of medicine:

Enhanced Patient Outcomes and Trust

Secure AI adoption will accelerate the development of personalized medicine. Because AI models can safely train on larger, more diverse datasets (via FL and SDG), they will become more accurate, less biased, and more effective at predicting and diagnosing diseases. Crucially, transparent security measures will build patient trust, which is vital for encouraging the data-sharing that powers these life-saving innovations.

Global Collaboration and Research

Privacy-preserving techniques will open doors for unprecedented global research collaboration. Researchers can pool insights from patient populations across continents without ever consolidating raw, regulated patient data, accelerating the pace of discovery for rare diseases and complex conditions.

Regulatory and Legal Clarity

The industry is moving toward a future where “secure by design” is not optional. As these five approaches become standard, regulatory bodies will likely formalize their inclusion in compliance audits. This will bring much-needed legal clarity and accountability, defining who is responsible when an AI system makes an error or is compromised.

The path to fully realizing AI’s potential in healthcare is fraught with data security risks. However, the five approaches (Federated Learning, Differential Privacy, Homomorphic Encryption, Synthetic Data, and Zero Trust Architecture) offer a robust roadmap. By embracing these secure, privacy-preserving technologies, healthcare institutions can confidently leverage the intelligence of AI while fiercely guarding the sanctity of patient data.